Deepfake threats soar 1200% as AI scams sweep South Africa

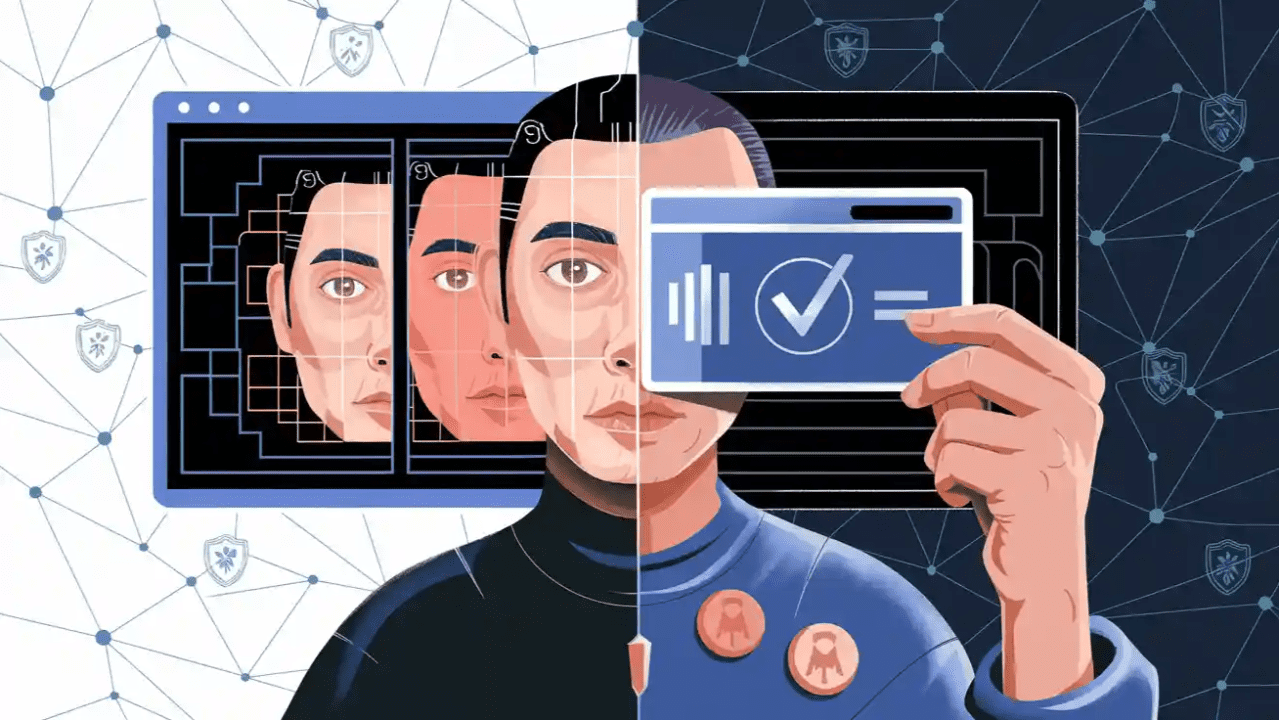

South Africa is witnessing a surge in artificial intelligence (AI) scams and deepfake manipulation, with instances reported to have increased by 1,200% in one year. The growth has affected several major sectors, including banking, financial technology (fintech), insurance, retail, media, and government services.

Deepfakes are pieces of content that have been created or altered using AI to make them appear realistic. They can be videos, images, or audio files capable of imitating real people by mimicking their faces, voices, and even mannerisms.

They are created using machine learning, a subset of AI in which a model is trained on thousands of real photos, videos, and audio recordings of an individual to learn how that person looks and sounds. The model then applies this information to generate new, false content that mimics the original.

For example, a deepfake video can make it appear as though a president has said something they never actually said. Audio deepfakes, on the other hand, can manipulate someone’s voice to deceive others into revealing confidential information. Currently, these fakes are used to spread misinformation, damage reputations, hijack identities, and overcome security measures that rely on facial or voice recognition.

The financial industry has been one of the hardest hit by various scams. Fintech and banking services are facing challenges such as SIM-swap scams, account opening scams, and impersonation. In these scams, AI voice cloning technology is used to replicate the voices of bank staff, tricking victims into disclosing confidential information or approving transactions.

South Africa’s First National Bank (FNB) recently disclosed that a deepfake scam was targeting its customers. Scammers impersonated bank staff and even family members of customers using voice, video, and text messages created through artificial intelligence. Victims were pressured into sending money urgently, and some lost large amounts before they realised they had been deceived.

Experts link the rise of such fraud to South Africa’s high level of digital connectivity. The country has over 50 million internet subscribers and some 124 million active cell phones. Furthermore, its 27 million social media users give cybercriminals an enormous pool of potential targets.

Fraud expands beyond banks

This type of AI-enabled crime extends beyond financial institutions. Insurance companies have also reported fraudulent claims involving altered videos or forged medical reports. These submissions can be very convincing, making them difficult to detect through standard verification processes. As a result, insurers are compelled to invest in new methods to authenticate claims.

Retailers are also affected. Scammers create duplicate online stores or advertising campaigns that appear legitimate. Customers are redirected to these fraudulent websites, where they end up paying for goods that do not exist. This situation impacts both e-commerce sites and physical stores that rely on online ads.

Media outlets have encountered manipulated videos and imitation voices used to spread misinformation and discredit individuals. Similarly, government organisations have faced breaches involving altered documents, as well as counterfeit identification data used to access state records.

The rise in scam cases has prompted improved detection and prevention methods. Real-time anomaly detection systems are being implemented to identify suspicious behaviour. Additionally, biometric verification and liveness detection are now commonly used to confirm the authenticity of faces and voices.

Device fingerprinting helps track suspicious activities across different devices, while behavioural analysis is used to identify fraudulent patterns before they can succeed. Together with public awareness, these measures are considered essential for preventing successful attacks.
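To give a sense of what anomaly detection can mean in practice, the sketch below flags a payment that deviates sharply from a customer's past payments. It is a minimal illustration only: the function name `is_anomalous`, the threshold of three standard deviations, and the sample amounts are all assumptions made for this example, not details of any bank's actual system.

```python
from statistics import mean, stdev


def is_anomalous(history, amount, threshold=3.0):
    """Return True if `amount` is far from the customer's usual payments.

    Hypothetical helper: measures how many standard deviations `amount`
    lies from the mean of `history` (a simple z-score check).
    """
    if len(history) < 2:
        return False  # too little data to judge either way
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # All past payments were identical; anything different stands out.
        return amount != mu
    return abs(amount - mu) / sigma > threshold


# Illustrative payment history for one customer (made-up values).
past_payments = [250.0, 300.0, 275.0, 320.0, 290.0]

print(is_anomalous(past_payments, 310.0))    # a typical payment
print(is_anomalous(past_payments, 15000.0))  # an unusually large payment
```

A check this simple would only ever be one signal among many. The systems described above would be expected to combine it with device, biometric, and behavioural data before blocking a transaction.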

Regulation and preparedness

Industry stakeholders are advocating for stricter laws to address the rise of AI-assisted crime. There are calls for legislation that specifically targets the misuse of deepfake technology, along with severe penalties for offenders once apprehended.

Compliance models are being reworked so that AI technology can be incorporated into government regulatory processes. This would create a single, uniform way of identifying and prosecuting AI-aided fraud across all sectors.

The government has also recognised the emerging danger posed by the misuse of AI and is calling for immediate national preparedness.

Governments are partnering with universities to ensure that engineering students receive training in developing systems that can identify deepfakes and prevent AI-enabled cyberattacks. Additionally, new initiatives will include collaboration with social scientists and ethicists to create guidelines and regulations that prioritise citizens’ privacy and uphold human dignity.

Banks and financial technology firms must enhance awareness campaigns for consumers. Customers are advised to verify callers, double-check messages, and proceed with caution before approving transactions.

The rapid rise of AI-driven scams indicates that no sector is entirely safe. As internet usage expands, companies and regulators will face increasing challenges in outpacing criminals utilising innovative technologies.

Collaboration among companies, authorities, and scientists is viewed as the solution. By enhancing technology, increasing regulation, and raising customer awareness, South Africa aims to protect users and maintain trust in its online economy.