Implementing decision engines and machine learning models in production

Bringing machine learning into an operational decision engine means, above all, accepting the realities of production systems rather than treating models as isolated research artefacts.

In my view, successful deployment starts with modelling every part of the solution (data ingestion, feature transformation, model inference, and logging) as one combined workflow. When I championed a credit-assessment program for a buy-now-pay-later service, my firm's early push for this combined strategy was the crucial factor in producing a high-quality, low-latency decision system.
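To make "one combined workflow" concrete, here is a minimal sketch in which ingestion, feature transformation, inference and logging are four functions called in a single code path. The stage names (`ingest`, `transform`, `infer`, `run_pipeline`), the input fields and the toy scoring rule are all hypothetical stand-ins, not the project's actual code or model.

```python
from dataclasses import dataclass, field
from typing import Any


def ingest(raw: dict[str, Any]) -> dict[str, Any]:
    # Hypothetical ingestion step: normalise the raw application payload.
    return {
        "applicant_id": str(raw["applicant_id"]),
        "income": float(raw["income"]),
        "requested_amount": float(raw["requested_amount"]),
    }


def transform(record: dict[str, Any]) -> dict[str, float]:
    # Hypothetical feature step: derive a single ratio feature.
    income = max(record["income"], 1.0)  # guard against division by zero
    return {"amount_to_income": record["requested_amount"] / income}


def infer(features: dict[str, float]) -> float:
    # Toy scoring rule standing in for a trained model: the lower the
    # amount-to-income ratio, the higher the score, clipped to [0, 1].
    return max(0.0, min(1.0, 1.0 - features["amount_to_income"]))


@dataclass
class DecisionLog:
    entries: list[dict[str, Any]] = field(default_factory=list)

    def log_decision(self, record: dict, features: dict, score: float) -> None:
        self.entries.append({"record": record, "features": features, "score": score})


def run_pipeline(raw: dict[str, Any], log: DecisionLog) -> float:
    # Every request goes through the same four stages in one call, so
    # ingestion, features, inference and logging cannot drift apart.
    record = ingest(raw)
    features = transform(record)
    score = infer(features)
    log.log_decision(record, features, score)
    return score
```

For example, an application with an income of 2000 and a requested amount of 500 has a ratio of 0.25 and therefore scores 0.75, and the log ends up holding one entry with the record, its features and the score.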

In practice, that meant embedding the prediction logic directly in serverless functions instead of relegating scoring to nightly batch jobs. Because the model and its dependencies were packaged in a lightweight container deployed to a function-as-a-service platform, the system scaled elastically with incoming requests.

Each invocation validated the incoming data, called the fraud-detection integrations, and returned a score within fifty milliseconds. At that latency, end users saw their results immediately, and throughput did not suffer as transaction volume grew.
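The per-request flow described above might look roughly like the handler below. This is a sketch under several assumptions: the `handler(event, context)` signature follows the common function-as-a-service convention (as in AWS Lambda's Python runtime), the model is loaded once at import time so warm invocations reuse it, and `load_model`, `fraud_check` and the "refer" outcome for flagged applications are hypothetical stand-ins for the real model artefact, fraud integration and business rule. The fifty-millisecond figure is used only as a budget for flagging slow calls.

```python
import json
import time

LATENCY_BUDGET_MS = 50.0  # target from the text; used here only to flag slow calls
REQUIRED_FIELDS = ("applicant_id", "income", "requested_amount")


def load_model():
    # Hypothetical loader. In a container-based function this would deserialise
    # the packaged model artefact; here it returns a toy scoring function.
    return lambda features: max(0.0, min(1.0, 1.0 - features["amount_to_income"]))


# Loaded once per container at import time, so warm invocations skip this cost.
MODEL = load_model()


def fraud_check(payload: dict) -> bool:
    # Hypothetical stand-in for an external fraud-detection integration.
    return bool(payload.get("device_flagged", False))


def validate(payload: dict) -> list[str]:
    errors = [f"missing field: {name}" for name in REQUIRED_FIELDS if name not in payload]
    if not errors and float(payload["income"]) <= 0:
        errors.append("income must be positive")
    return errors


def handler(event, context=None):
    start = time.perf_counter()
    payload = json.loads(event["body"])

    errors = validate(payload)
    if errors:
        return {"statusCode": 400, "body": json.dumps({"errors": errors})}

    if fraud_check(payload):
        # Assumed rule: flagged applications are not auto-scored.
        result = {"decision": "refer", "score": None}
    else:
        ratio = float(payload["requested_amount"]) / float(payload["income"])
        result = {"decision": "score", "score": MODEL({"amount_to_income": ratio})}

    elapsed_ms = (time.perf_counter() - start) * 1000
    result["latency_ms"] = round(elapsed_ms, 3)
    result["within_budget"] = elapsed_ms <= LATENCY_BUDGET_MS
    return {"statusCode": 200, "body": json.dumps(result)}
```

Loading the model at module level rather than inside `handler` is the usual way to keep per-request latency low on serverless platforms, since the cost is paid only on a cold start.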

It is also important to build transparency into the pipeline. Storing every input to the system, the feature snapshot behind each decision, and every prediction output in a high-performance NoSQL store made it possible to audit decisions thoroughly and fix problems quickly.
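One way to structure such a record is a single document per decision holding the raw input, the feature snapshot, the model version and the output. In the sketch below an in-memory dictionary stands in for a document-oriented NoSQL table; the `InMemoryAuditStore` class, `record_decision` helper and all field names are illustrative assumptions rather than the schema used in the project.

```python
import copy
import uuid
from datetime import datetime, timezone
from typing import Optional


class InMemoryAuditStore:
    """Hypothetical stand-in for a document-oriented NoSQL table keyed by decision_id."""

    def __init__(self) -> None:
        self._items: dict[str, dict] = {}

    def put(self, item: dict) -> None:
        self._items[item["decision_id"]] = item

    def get(self, decision_id: str) -> Optional[dict]:
        return self._items.get(decision_id)


def record_decision(store: InMemoryAuditStore, raw_input: dict, features: dict,
                    score: float, model_version: str) -> str:
    decision_id = str(uuid.uuid4())
    store.put({
        "decision_id": decision_id,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,  # ties each score to a specific release
        # Deep copies freeze the exact input and feature snapshot used for this
        # decision, so later changes to the caller's objects cannot alter the record.
        "raw_input": copy.deepcopy(raw_input),
        "features": copy.deepcopy(features),
        "score": score,
    })
    return decision_id
```

Keeping the model version next to the features is what lets an analyst replay a past decision against a new threshold or a new model and compare the outcomes.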

This record-keeping allowed analysts to review past decisions, adjust risk thresholds, and keep improving the engineered features. Embedding observability at the core, rather than retrofitting logging after deployment, turns model governance from a compliance checkbox into a strategic advantage.

Automated validation is another cornerstone of robust production systems. Every model update went through a CI/CD pipeline that ran regression tests against historical datasets to confirm it still met performance requirements. Containerised releases made rollbacks easy when they were needed and eliminated discrepancies between development and live deployments.
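A regression gate of this kind can be as simple as scoring a frozen historical dataset with both the current and the candidate model and failing the build if the candidate's metric drops by more than a tolerance. The sketch below assumes accuracy at a fixed 0.5 threshold and a one-percentage-point tolerance; the metric, threshold, tolerance and function names are illustrative assumptions, not the project's actual acceptance criteria.

```python
from typing import Callable, Sequence

Model = Callable[[dict], float]


def accuracy(model: Model, rows: Sequence[dict], labels: Sequence[int],
             threshold: float = 0.5) -> float:
    # Fraction of historical cases where the thresholded score matches the recorded outcome.
    correct = sum(int(model(row) >= threshold) == label for row, label in zip(rows, labels))
    return correct / len(labels)


def regression_gate(current: Model, candidate: Model, rows: Sequence[dict],
                    labels: Sequence[int], tolerance: float = 0.01) -> bool:
    """Return True if the candidate is no worse than the current model minus the tolerance."""
    baseline = accuracy(current, rows, labels)
    challenger = accuracy(candidate, rows, labels)
    print(f"baseline={baseline:.3f} candidate={challenger:.3f}")
    return challenger >= baseline - tolerance
```

In a CI job, a small script would call `sys.exit(0 if regression_gate(...) else 1)` so that a failing gate stops the release; a real pipeline would likely track several metrics (for example AUC or default rate by segment) rather than accuracy alone.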

This discipline enabled us to react quickly to changing market conditions and revise decision rules without extended downtime or manual intervention.

Real-world systems should also expect external failures. Identity-verification and fraud-scoring services may become unavailable from time to time, and a rigid orchestration that waits indefinitely on them blocks the entire pipeline.

To address this, the decision engine fell back to conservative default scores when a dependency failed and queued the affected records for later reconciliation. This graceful degradation kept the service running and kept inaccurate decisions to a minimum during transient disruptions.
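A minimal sketch of that degradation path: call the external service with a timeout and, on a timeout or error, return a conservative default and queue the record for reconciliation. It assumes the dependency returns a fraud-risk score where 1.0 is the highest risk, so the conservative default is 1.0; the `call_fraud_service` parameter, the 200 ms timeout and the in-process `queue.Queue` are hypothetical choices, and a production system would use a durable queue instead.

```python
import queue
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

CONSERVATIVE_FRAUD_SCORE = 1.0  # assumption: treat an unknown result as maximum risk
DEPENDENCY_TIMEOUT_S = 0.2      # assumption: illustrative per-call timeout

_executor = ThreadPoolExecutor(max_workers=4)
# In-process stand-in for a durable reconciliation queue.
reconciliation_queue: queue.Queue = queue.Queue()


def fraud_score_with_fallback(record: dict,
                              call_fraud_service: Callable[[dict], float]) -> tuple[float, bool]:
    """Return (score, degraded); degraded is True when the default was used."""
    future = _executor.submit(call_fraud_service, record)
    try:
        return future.result(timeout=DEPENDENCY_TIMEOUT_S), False
    except Exception:
        # Covers both a timeout (concurrent.futures.TimeoutError) and any error
        # raised by the dependency. On a timeout the worker thread keeps running
        # in the background; the caller simply stops waiting for it.
        reconciliation_queue.put(record)
        return CONSERVATIVE_FRAUD_SCORE, True
```

Returning the `degraded` flag alongside the score lets the caller log which decisions were made on defaults, so the reconciliation job knows exactly which records to re-score once the dependency recovers.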

Security and compliance considerations must be woven into every layer of the solution. Role-based access control at the API gateway and data-store level ensured that only authorised services and personnel could view or edit sensitive data.
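Role-based access can be expressed as a mapping from roles to permitted actions, checked before any read or write, with access denied by default. The roles, actions and the `authorise` helper below are hypothetical; in a real deployment this check would usually be enforced by the API gateway and the data store's own access policies rather than by application code alone.

```python
from enum import Enum


class Action(Enum):
    READ_DECISION = "read_decision"
    READ_PII = "read_pii"
    EDIT_THRESHOLDS = "edit_thresholds"


# Hypothetical role-to-permission mapping.
ROLE_PERMISSIONS: dict[str, frozenset] = {
    "scoring-service": frozenset({Action.READ_DECISION}),
    "risk-analyst": frozenset({Action.READ_DECISION, Action.EDIT_THRESHOLDS}),
    "compliance-officer": frozenset({Action.READ_DECISION, Action.READ_PII}),
}


class AccessDenied(Exception):
    pass


def authorise(role: str, action: Action) -> None:
    # Unknown roles get an empty permission set, so access is denied by default.
    if action not in ROLE_PERMISSIONS.get(role, frozenset()):
        raise AccessDenied(f"role {role!r} may not perform {action.value}")
```

Deny-by-default means a newly added service has no access until someone grants it explicitly, which is the safer failure mode for sensitive credit data.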

Encryption in transit and at rest, together with fine-grained auditing, satisfied regulatory requirements while preserving overall system performance. My experience has shown that treating security as an essential principle from the design phase, rather than as a final step, reduces operational risk and prevents costly rework.

Cost efficiency rounds out the picture of a mature production deployment. Although serverless compute and managed databases remove almost all of the operational overhead, unchecked consumption can still drive costs up.

Continuous monitoring of invocation rates, data throughput, and storage growth triggered automatic scale-down during off-peak periods. Together, observability and cost alerts kept resource consumption in line with business forecasts without requiring human supervision.
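The monitoring logic can be reduced to a rule that compares observed usage with forecast values and decides whether to scale down or raise a cost alert. The snapshot fields, the 00:00 to 05:59 UTC off-peak window, the traffic threshold, the 20% alert margin and the `plan_actions` function are illustrative assumptions; a real deployment would read these metrics from its cloud provider and act through that provider's scaling and alerting services. For brevity the sketch checks invocations and cost only, not throughput or storage growth.

```python
from dataclasses import dataclass


@dataclass
class UsageSnapshot:
    hour_utc: int
    invocations_per_min: float
    cost_to_date: float           # actual spend for the period, in the billing currency
    forecast_cost_to_date: float  # business forecast for the same period


OFF_PEAK_HOURS = range(0, 6)    # assumption: 00:00 to 05:59 UTC is off-peak
LOW_TRAFFIC_INVOCATIONS = 10.0  # assumption: below this rate, scale down
COST_ALERT_MARGIN = 0.20        # assumption: alert when spend is 20% over forecast


def plan_actions(s: UsageSnapshot) -> list[str]:
    actions = []
    if s.hour_utc in OFF_PEAK_HOURS and s.invocations_per_min < LOW_TRAFFIC_INVOCATIONS:
        actions.append("scale_down")
    if s.cost_to_date > s.forecast_cost_to_date * (1 + COST_ALERT_MARGIN):
        actions.append("cost_alert")
    return actions
```

Comparing spend against a forecast for the same period, rather than against a fixed budget, is what keeps the alert aligned with business predictions as expected volume changes.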

Putting decision engines and machine-learning models into production requires more than mastery of algorithms. It needs a holistic engineering practice that combines containerised model serving, serverless compute, audit-ready data stores, automated validation, and resilient orchestration.

Only when a team treats deployment as a first-class concern will it build intelligent systems that users trust, that withstand real-world failures, and that adapt to rapidly changing requirements.

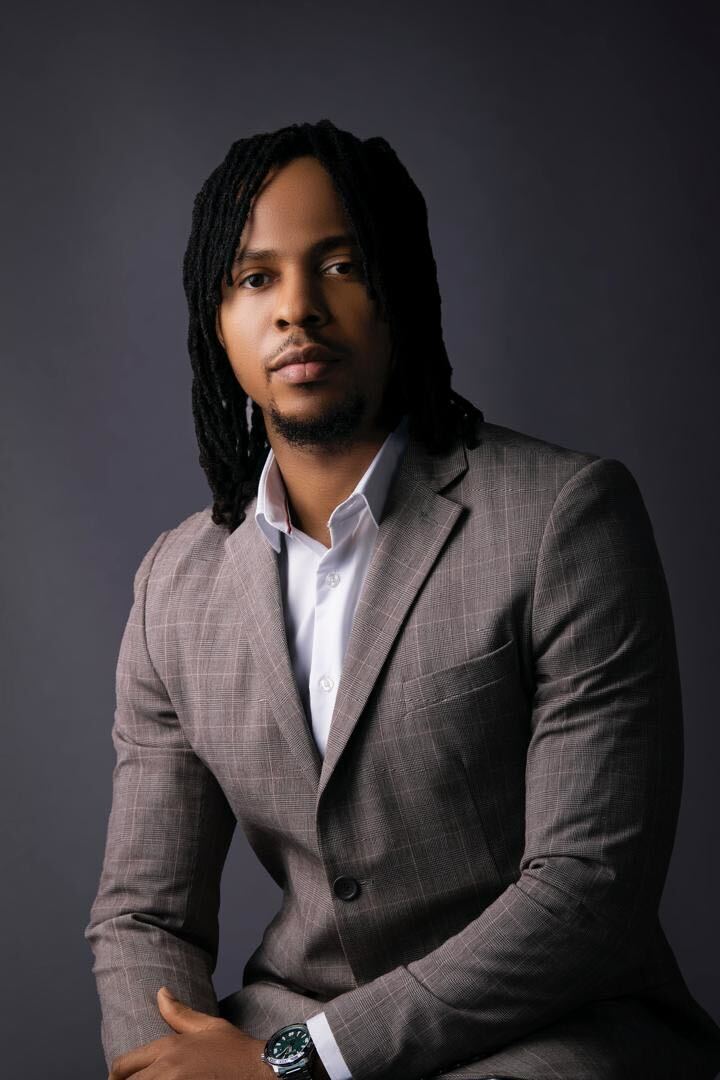

Meet Emmanuel Okorie

Emmanuel Okorie is a data engineer with almost 10 years of experience helping companies build reliable data pipelines and extract insights from complex datasets.

He has worked across various industries and regions, from fintech and e-commerce to health tech and clean energy, with roles in Europe, the Middle East, Africa, and the U.S. Emmanuel is passionate about using data to solve real-world problems and currently focuses on building scalable, cloud-based data systems.