The Bandwidth Economy: Turning Viewers into the Network

TL;DR:

- Tayga is a programmable hyper-edge economy: an open, public protocol for programmatic resource discovery that lets applications tap the abundance of digital resources at the edge. The hyper‑edge is the resource layer built on consumer‑device networks.

- Anyone with an internet connection can contribute resources on the hyper‑edge: telcos, DePINs, universities, or individuals. Tayga’s economics reward stable, consistently available capacity, while the protocol handles discovery and matching between demand and supply.

- Tayga’s first application, Rilla, uses AI orchestration to deliver live video directly between peers. The AI adapts in real-time as devices join or drop and conditions fluctuate. Tayga runs incentivized video relays for Rilla, so broadcasters can activate viewers’ devices as passive relays.

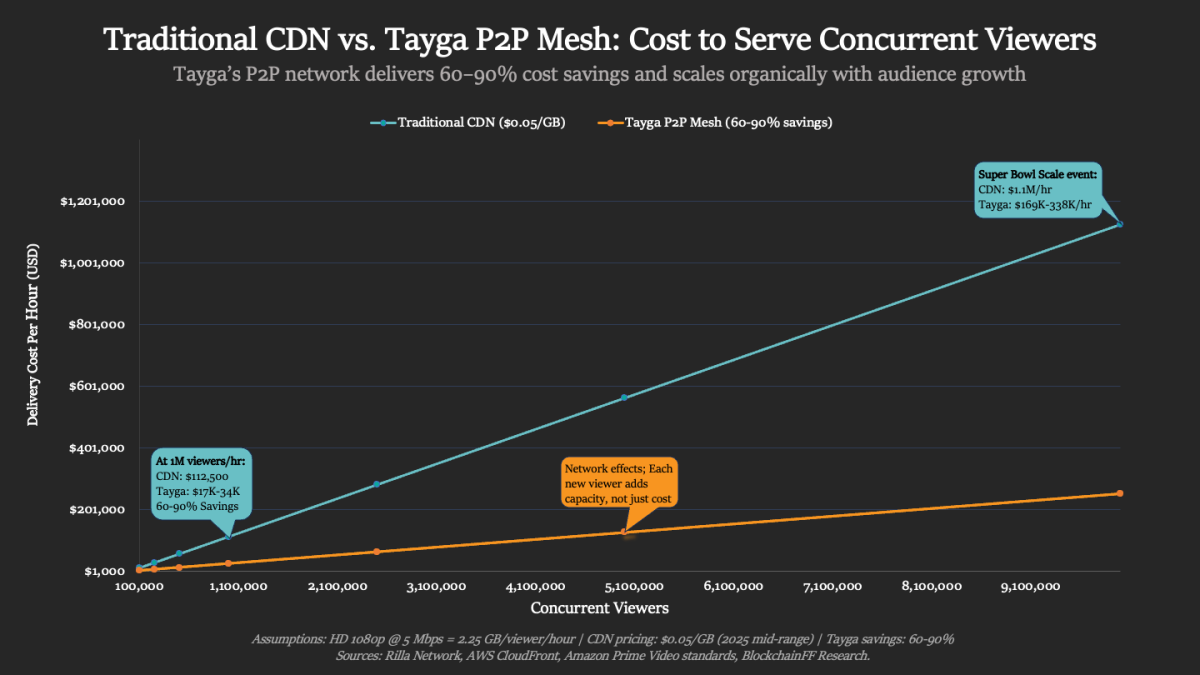

- Augmenting centralized CDNs with this dynamic layer can reduce streaming costs by 60–90% and improve quality and stability, cutting buffering and in‑stream errors.

- CDNs powered the streaming boom by pushing content closer to viewers. But traffic keeps rising, and the limits of that model are showing. The opportunity now is at the hyper‑edge, where local bandwidth, device proximity, and distributed coordination handle surges that traditional networks struggle to reach.

The internet wasn’t built for real-time video. Early networks handled text and static files, not today’s latency-sensitive live streams. Video now dominates global internet traffic, accounting for well over half of downstream usage.

Content Delivery Network (CDN) operators such as Akamai, Cloudflare, Fastly, Amazon (CloudFront), and Google have scaled to meet some of this demand, collectively pushing hundreds of Tbps. Yet used international bandwidth has surpassed 6.4 petabits per second (6,400 Tbps) and is growing roughly 30% annually, a pace that outstrips what any single provider can supply. CDNs are capital‑intensive, and their costs scale with traffic; as data demand accelerates, that model looks increasingly hard to sustain.

This widening gap is visible in practice. Major broadcasters spend six-, seven-, and in some cases eight-figure sums to guarantee streaming reliability, yet buffering and quality drops remain common, especially outside Tier-1 markets. For instance, in January 2024, NBC Peacock’s exclusive NFL Wild Card stream reportedly consumed 30% of U.S. internet traffic at peak, an unprecedented load. Even industry giants recognize that streaming at this scale poses a fundamental challenge, as traditional pipelines strain under unpredictable surges.

Beneath the technical and cost burdens lies a deeper issue: centralized control. A handful of CDN providers carry the vast majority of video traffic, creating points of fragility. When one goes down, the effects ripple globally.

In October 2025, a DNS failure tied to AWS and its DynamoDB endpoints cascaded into a wider cloud outage, disrupting thousands of companies and degrading or interrupting an estimated 20% of global internet traffic for hours. AWS reported full restoration roughly 15 hours after the first errors, but downstream systems took longer to recover, and external analyses put the total economic damage in the billions.

Other providers have had similar failures. Cloudflare suffered an outage in July 2025 caused by a misconfiguration of its DNS resolver, Akamai had an outage in July 2021 following a software update, and a software bug at Fastly in June 2021 took major websites, including Reddit, Spotify, Amazon, and the New York Times, completely offline for about an hour.

These incidents underscore how much the internet relies on a few intermediaries. A single mistake or outage in a centralized CDN can disrupt content delivery at a continental scale. The outcome is an ecosystem that, while convenient, is brittle, expensive to maintain, and reaching the limits of its economic scalability.

Challenges of Centralized CDNs

Live video delivery over traditional CDNs faces three structural bottlenecks:

High Cost of Scaling: Each additional viewer increases the amount of data the network must send out to users, which drives delivery costs higher. At typical CDN rates (~$0.05 per GB), streaming one HD event to 1M concurrent viewers for an hour moves roughly 4.5 PB of data, costing over $225,000 in CDN bills. For platforms that host multiple large live events, these bandwidth bills quickly escalate into the millions. Bandwidth is often one of the top two expenses (alongside content rights) for streaming services, squeezing margins for publishers.
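The arithmetic behind that figure can be reproduced in a few lines. This is a minimal sketch: the function name `cdn_delivery_cost` is hypothetical, and the 10 Mbps bitrate is an assumption inferred from the 4.5 PB total (about 4.5 GB per viewer-hour), not a number stated above. Decimal units are used throughout (1 GB = 1,000 MB).

```python
def cdn_delivery_cost(viewers: int, hours: float, bitrate_mbps: float,
                      usd_per_gb: float) -> tuple[float, float]:
    """Return (total data in GB, cost in USD) for one live stream."""
    # Mbps -> MB per second -> MB over the event -> GB per viewer
    gb_per_viewer = bitrate_mbps / 8 * 3600 * hours / 1000
    total_gb = gb_per_viewer * viewers
    return total_gb, total_gb * usd_per_gb


# Assumed inputs: 1M concurrent viewers, 1 hour, ~10 Mbps HD, $0.05 per GB
total_gb, cost = cdn_delivery_cost(1_000_000, 1, 10, 0.05)
print(f"{total_gb / 1e6:.2f} PB, ${cost:,.0f}")  # prints "4.50 PB, $225,000"
```

Because cost is linear in both viewers and duration, doubling either doubles the bill, which is why a calendar of large events escalates so quickly.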

Geographic Gaps: CDNs concentrate servers in dense, wealthy regions. Audiences in rural areas or emerging markets often sit far from cache nodes. Outside of Tier-1 zones, latency climbs and failure rates increase, resulting in more buffering and dropouts. Even within cities, network congestion can degrade quality. This ‘last mile’ problem means viewers in less-connected regions get a worse experience, and publishers struggle to guarantee consistent QoS globally.

Opaque Attribution: When using a CDN, content providers rent distribution infrastructure rather than owning it. Once a video stream enters the CDN’s network, the content owner loses visibility into how it’s routed and what drives the costs. Billing is often difficult for publishers to understand or verify, and there’s limited transparency or control, so delivery inefficiencies or over-provisioning may be inflating their costs without their knowledge. In short, you’re writing large checks to third parties and hoping the service is optimal, without a clear breakdown of value for money.

Layering more servers and bandwidth contracts on top of this model won’t fully solve these issues. Demand for real-time and interactive media is accelerating faster than centralized CDNs can economically scale. A fundamentally different approach is needed, one that taps into resources beyond the few big providers and that aligns the network’s growth with the audience’s growth.

The Rise of DePIN: Infrastructure Without Gatekeepers

A promising alternative has emerged in recent years through Decentralized Physical Infrastructure Networks, or DePIN. The core idea is simple: critical internet resources don’t need to be owned and operated by a few mega-companies. They can be distributed across millions of participants, each contributing what they have and getting rewarded in return.

The first wave of DePIN projects proved this bottom-up model in key domains:

- Wireless coverage: Helium built a community-powered wireless network, rewarding users for running hotspot devices that provide IoT and low-power cellular coverage. It showed that even spectrum-based infrastructure can be crowdsourced at scale.

- Storage: Filecoin (and IPFS) created a marketplace for decentralized data storage, paying individuals and data centers to contribute spare disk space. It demonstrated that cloud storage can be made verifiable and user-provided.

- Compute: Akash and similar networks applied the concept to computing power, allowing anyone with spare servers to offer cloud computing on demand, secured by blockchain contracts.

These projects reduced costs by double-digit percentages compared to traditional providers and, importantly, unlocked coverage in areas that centralized firms found unprofitable. They proved that infrastructure can be built in a bottom-up, market-driven way rather than top-down by incumbents.

However, one resource remained largely untouched: bandwidth. Despite video accounting for the majority of internet traffic, content delivery is still almost entirely in the hands of centralized CDNs. For DePIN to fully support real-time media such as streaming and two-way interactive content, bandwidth needs to follow the same path as storage, compute, and wireless. This is the opening that Tayga and Rilla are designed to address.

Rewiring Bandwidth with P2P and AI

Rilla treats bandwidth not as a fixed utility rented from a few ISPs or CDNs, but as a programmable asset created by users as a product of their collaboration. It is a real-time peer-to-peer protocol that uses intelligent orchestration to connect a network of everyday devices. By reimagining the content delivery stack, it leverages two key principles: the power of peer-to-peer networks to unlock more bandwidth, and smart orchestration to ensure the network remains reliable.

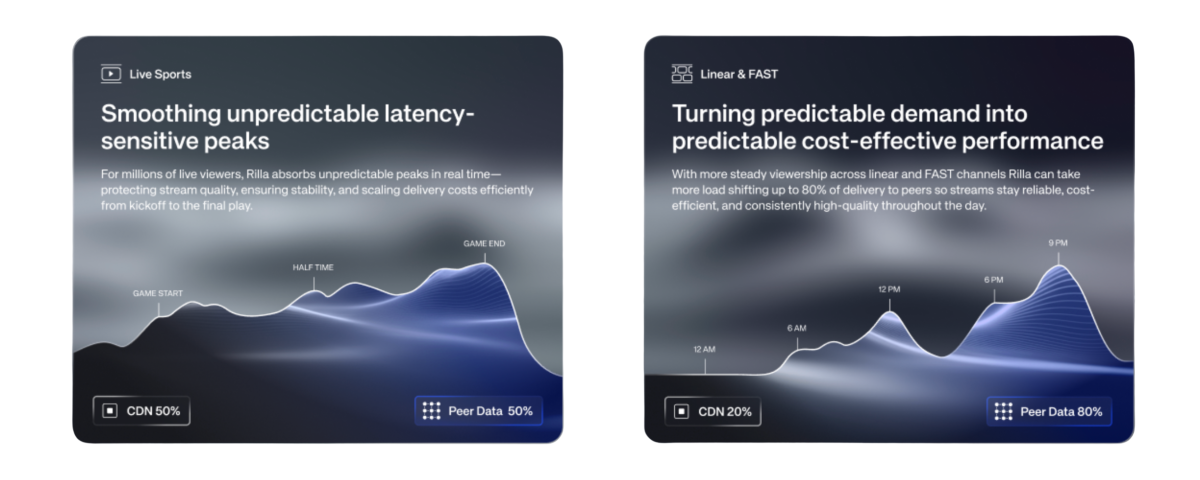

Instead of routing video streams through an origin server and hierarchical caches, Rilla creates a dynamic mesh of viewers, each acting as a passive relay node. When you watch a stream, your device helps deliver it to others. Phones, laptops, smart TVs, and even home routers (any device with an internet connection) can share bandwidth to forward the video to nearby peers.

As the audience grows for an event, so does the network’s delivery capacity. Each new viewer contributes to the network, allowing it to scale organically and locally. In this way, the network expands horizontally, rather than relying on larger data centers.

Each forwarding device earns cryptographic attribution, a verifiable off‑chain proof of relayed units. Smart contracts can then reward contributions (e.g., tokens or credits). This creates a transparent and trust-minimized accounting of who provided what bandwidth, enabling micro-rewards without relying on a central billing server. It’s akin to how Bitcoin nodes verify and reward work done; here, the ‘work’ is delivering content.
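To make the idea of a relay proof concrete, here is a minimal sketch of a receipt that a receiving peer could issue for one forwarded chunk. Everything in it is an assumption for illustration: the function names, the receipt fields, and above all the use of an HMAC with a shared key, which stands in for whatever scheme Rilla actually uses. A production design would more plausibly use asymmetric signatures so that third parties can verify receipts without holding a secret.

```python
import hashlib
import hmac
import json


def make_receipt(relay_id: str, chunk: bytes, seq: int, viewer_key: bytes) -> dict:
    """Receiving peer acknowledges one relayed chunk (hypothetical format)."""
    body = {
        "relay": relay_id,
        "seq": seq,
        "chunk_sha256": hashlib.sha256(chunk).hexdigest(),
    }
    # Canonical JSON so issuer and verifier tag the same bytes
    payload = json.dumps(body, sort_keys=True).encode()
    tag = hmac.new(viewer_key, payload, hashlib.sha256).hexdigest()
    return {**body, "tag": tag}


def verify_receipt(receipt: dict, viewer_key: bytes) -> bool:
    """Recompute the tag; any change to the receipt body invalidates it."""
    body = {k: v for k, v in receipt.items() if k != "tag"}
    payload = json.dumps(body, sort_keys=True).encode()
    expected = hmac.new(viewer_key, payload, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, receipt.get("tag", ""))


key = b"demo-key"  # stand-in secret for the sketch only
receipt = make_receipt("relay-1", b"video-bytes", seq=7, viewer_key=key)
print(verify_receipt(receipt, key))                 # True
print(verify_receipt({**receipt, "seq": 8}, key))   # False: body was altered
```

The point of the sketch is the accounting property: a relay cannot claim credit for a chunk without a receipt bound to that chunk's hash and sequence number.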

Crucially, Rilla’s design embraces the volatility that earlier P2P CDN attempts struggled with. In old peer-to-peer streaming trials, if too many peer nodes left or if demand shifted suddenly, the stream quality would suffer. Rilla avoids this with an AI coordination engine at its core, which continuously evaluates bandwidth, latency, buffer position, and capacity, making split-second routing decisions:

- If a device drops or slows, the AI reroutes through alternate peers with minimal disruption.

- When a regional demand spike occurs (say, a sudden surge of viewers in one city), the AI can draw on Tayga hyper-edge capacity in that region, keeping the content available close to those viewers.

- The AI algorithm optimizes not just for load balancing, but also for latency, quality, and cost. It tries to deliver content from the most optimal source based on the broadcaster’s configuration to achieve the given business outcomes.

By turning churn into resilience, this adaptive routing ensures that even though individual peers are less dependable than a data center, the collective network remains reliable. Every failure (a device leaving) triggers a sub-second automated fix (re-route to others).

In effect, Rilla’s network self-heals in real-time. This was the missing ingredient in older P2P streaming systems, such as the ‘mesh CDN’ startups of the early 2010s, which had the peer-to-peer networks but lacked the smart coordination to handle real-world chaos and global scale. Rilla’s AI closes that gap.

Why AI Coordination Solves P2P Reliability Issues

Peer-to-peer content delivery isn’t a new concept; with Napster, BitTorrent, and other early experiments like Joost and PPTV, we’ve seen that peers can share bandwidth. But those systems were mostly for file downloads or small-group streams and, in computer science terms, were only “eventually consistent,” meaning that you would get your movie, but just at an unspecified time in the future.

Rilla is built from the ground up to deliver live video with minimal latency. Its delivery is, to a degree, “sequentially consistent,” meaning that you receive the parts of the video in the correct order and within a given time window. This makes it possible to stream live sports at massive scale at a fraction of the cost.

Earlier P2P CDNs buckled under the stress of live broadcasts because they couldn’t guarantee stable delivery when thousands of nodes were constantly joining or leaving. The most visible example was Joost, the P2P video platform founded by the creators of Skype, which collapsed after its mesh network failed to deliver stable live streams at scale due to peer churn and bandwidth inconsistency.

The introduction of AI in Rilla is a game-changer for P2P reliability. Think of it as a traffic cop plus an air traffic controller rolled into one, powered by real-time data:

- Real-Time Monitoring: The system measures throughput and ping times between peers continuously. It knows which connections are strong or weak at any given millisecond.

- Predictive Adjustment: Using these data streams, the AI predicts where bottlenecks might occur and proactively shifts traffic. If one route starts lagging, Rilla preemptively duplicates or redirects the stream to a more optimal path.

- Load Smoothing: During sudden spikes (e.g., a goal scored in a football match causing a surge of viewers), Rilla organically scales capacity as viewers join a stream. Each new viewer brings additional capacity to the network, enabling Rilla to establish optimal peer relationships and ensure continuity of service.
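The monitoring and predictive-adjustment steps above can be sketched with an exponentially weighted moving average (EWMA) of round-trip times. This is an illustrative stand-in, not Rilla's predictor: the class name `RouteMonitor`, the smoothing factor, and the 150 ms limit are all assumptions. The idea is to project the smoothed latency one step ahead and move traffic before the route actually breaches the limit.

```python
class RouteMonitor:
    """Tracks one peer route and flags it before latency exceeds a limit."""

    def __init__(self, alpha: float = 0.3, limit_ms: float = 150.0):
        self.alpha = alpha        # weight given to the newest sample
        self.limit_ms = limit_ms  # latency budget for this route (assumed)
        self.smoothed = None      # EWMA of observed round-trip times
        self.trend = 0.0          # change in the EWMA since the last sample

    def observe(self, rtt_ms: float) -> bool:
        """Feed one RTT sample; return True if traffic should be shifted."""
        if self.smoothed is None:
            self.smoothed = rtt_ms
            return rtt_ms > self.limit_ms
        previous = self.smoothed
        self.smoothed = self.alpha * rtt_ms + (1 - self.alpha) * previous
        self.trend = self.smoothed - previous
        # One-step-ahead projection: current level plus current trend
        return self.smoothed + self.trend > self.limit_ms


monitor = RouteMonitor()
for rtt in [40, 42, 41, 43, 120, 200, 260]:
    if monitor.observe(rtt):
        print(f"shift traffic away (latest RTT {rtt} ms)")
```

With these sample values the route is flagged only on the final reading: steady samples never trigger a shift, and a single spike is damped by the smoothing, while a sustained rise is caught.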

Every unit of video forwarded is also tied to a cryptographic proof that it was delivered, building a verifiable ledger of service. This not only enables fair rewards but also provides accountability. If a user tries to cheat or not deliver, it’s detectable, and the network can route around them.

By combining adaptive AI coordination with on-chain verification, Rilla effectively converts the volatility of peer-to-peer into a strength. The more the crowd participates, the more robust and cost-efficient the network becomes. Older P2P models failed at scale because they treated volatility as a problem; Rilla treats it as just another variable to manage in a multi-dimensional optimization space.

This allows peer-to-peer video delivery to scale reliably for the first time, shifting bandwidth from a rented service provided by a few companies into a shared resource provided by the community.

The Three Layers of Tayga's Programmable Hyper-Edge Economy

In the context of Rilla and content delivery, Tayga reframes bandwidth as programmable and ephemeral infrastructure rather than a rented and static service. Its architecture has three layers that turn everyday devices into a programmable hyper-edge economy:

Edge Resource Discovery identifies and connects applications to optimal edge resources against cost, performance, and policy objectives.

Verifiable Delivery records the use of those resources as compact, auditable proofs, ensuring transparent accountability without exposing underlying data.

Incentivized Performance aligns economic reward with verified contribution, creating a self-optimizing ecosystem that encourages reliability, efficiency, and long-term participation.
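As a rough illustration of how the third layer could tie rewards to verified contribution, the sketch below splits a reward pool pro rata by verified units, weighted by an availability factor so that stable capacity earns more. The formula, the function name `split_rewards`, and the inputs are hypothetical; Tayga's actual reward rules are not specified here.

```python
def split_rewards(pool: float,
                  contributions: dict[str, tuple[float, float]]) -> dict[str, float]:
    """Split `pool` across relays.

    contributions maps relay_id -> (verified_units, availability in 0..1).
    """
    # Weight each relay's verified delivery by how consistently it was available
    weights = {rid: units * avail for rid, (units, avail) in contributions.items()}
    total = sum(weights.values())
    if total == 0:
        return {rid: 0.0 for rid in contributions}
    return {rid: pool * w / total for rid, w in weights.items()}


payouts = split_rewards(100.0, {"a": (600, 1.0), "b": (400, 0.5)})
print(payouts)  # {'a': 75.0, 'b': 25.0}
```

Relay "b" delivered 40% of the units but receives 25% of the pool because it was available only half the time, which is one simple way to encode a preference for dependable capacity.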

Tayga and Rilla are the first steps towards what the team calls the ‘Open Edge.’ Just as cloud computing opened servers to be rented on demand, the Open Edge opens bandwidth, storage, and compute as a resource that anyone can contribute to or draw from, on an open and public protocol with auditability and transparent rules and behaviors.

Traditional CDNs pre‑provision capacity in fixed locations at largely static prices. Rilla’s network is elastic and market-driven; capacity appears exactly where viewers are, when they need it, driven by incentives rather than centralized planning.

Bandwidth as the Next DePIN Frontier

Global spending on CDN services is already on the order of tens of billions per year and climbing rapidly with streaming demand. In fact, the CDN market is projected to reach $33B in 2025, and is on track to more than double over the next decade. This growth is fueled by our insatiable appetite for video content. According to Cisco’s widely cited forecasts, online video makes up 82% of all internet traffic, a level that is expected to increase with the rise of ultra-high-definition streams and immersive media.

Not only is most internet traffic video, but a rising share of that video is live or time-sensitive. In the U.S., streaming has overtaken broadcast TV in total viewership. In June 2025, streaming comprised 46% of all TV viewing, eclipsing cable and broadcast combined (Nielsen Gauge). Globally, live streaming of sports, esports, and concerts is surging, driving intense periodic spikes in traffic. Ericsson’s Mobility Report in June 2025 highlighted that global mobile data traffic is growing at about 17% CAGR through 2030, with video (especially live video) being the dominant driver.

Mobile users today consume around 33 exabytes of data per day in aggregate, averaging 4.2 GB per person, a staggering figure underpinned largely by video apps.

Sandvine’s 2024 Global Internet Phenomena report further highlights that a handful of major platforms (Google’s YouTube, Netflix, Meta’s apps, etc.) account for roughly 65–70% of all internet traffic by volume. These ‘mega-flows’ are mostly video streams, illustrating how a few services drive the lion’s share of bandwidth use.

The upshot is that content delivery costs and constraints are now front-and-center for the biggest names in tech and media. When a single streaming event can account for roughly 30% of a country’s internet traffic (as with Peacock’s NFL game) and when bandwidth fees comprise one of the largest expenses on a content provider’s P&L, the incentive to find new solutions is enormous.

Traditional CDNs remain essential for baseline delivery, but they are over-provisioned on ordinary days and under-provisioned during flash crowds. Providers like Comcast have been testing stopgap measures (e.g. new low-latency congestion protocols, edge caching upgrades), yet even they acknowledge that unprecedented streaming scales require rethinking the approach.

This is where Rilla stands out. By its very nature, a peer-to-peer network self-scales in tandem with demand. When a stream goes viral in a region, that same surge in viewers brings an equivalent surge in local capacity (since each viewer’s device adds a node).

The network expands exactly where the audience is, mirroring geographic demand patterns organically. In effect, Rilla can absorb viral spikes that would overwhelm static CDNs, and it does so without significant upfront capital costs; there’s no need to build out new data centers in anticipation of traffic that might only materialize for a one-off event.
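A back-of-the-envelope model shows why peer capacity tracks demand. In the sketch below, every viewer both consumes the stream and contributes some upload bandwidth, so audience size cancels out of the offload fraction. The function name and all input values are hypothetical illustrations, not measurements from Rilla.

```python
def peer_offload(viewers: int, bitrate_mbps: float,
                 avg_upload_mbps: float, relay_share: float) -> float:
    """Fraction of total delivery that viewers' own upload could cover.

    relay_share is the assumed fraction of viewers able to relay.
    """
    demand = viewers * bitrate_mbps
    if demand == 0:
        return 0.0
    peer_supply = viewers * relay_share * avg_upload_mbps
    return min(demand, peer_supply) / demand


# Illustrative inputs: 10 Mbps stream, 12 Mbps usable upload, 60% of viewers relay
for audience in (1_000, 1_000_000):
    print(audience, round(peer_offload(audience, 10, 12, 0.6), 2))
```

Both audience sizes give the same fraction (0.72 with these inputs): supply and demand grow together, so what limits offload is per-viewer upload capacity and the share of viewers who relay, not the size of the crowd.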

Two converging trends make Rilla’s approach especially timely:

- Live content is exploding: A growing share of video traffic comes from live events or “appointment viewing” online. Whether it’s the FIFA World Cup, a global esports tournament, or a famous musician’s livestreamed concert, these events create massive localized surges of traffic for a few hours or days. Centralized networks struggle to handle these transient peaks efficiently. Rilla’s peer mesh excels in precisely these scenarios by leveraging crowd resources in the moment.

- Efficiency at scale pays off: Because video constitutes such a dominant portion of internet data, even modest percentage gains in delivery efficiency translate to significant savings in bandwidth. If global video delivery were 10% more efficient, that could free up exabytes of data transfer per month. A 60–90% cost cut isn’t incremental; it can save majors tens of millions or open markets previously too costly to serve.

Recruiting upstream bandwidth from nearby peers (instead of distant CDNs), rewarding contributors directly, and dynamically stabilizing streams via AI together offer a path to scale live video without the usual trade-offs of huge infrastructure investment or compromised quality.

In essence, it turns the streaming infrastructure into something that pays for itself through community participation, rather than something you perpetually pay others for. This flips the script on the traditional CDN business model.

The Team Behind Tayga

Tayga’s leadership combines systems engineering depth, protocol design experience, and long-running commercial execution across media and real-time infrastructure. The group covers the full stack from low-latency coordination layers to high-value industry relationships, giving Tayga both technical credibility and a direct path into large media networks.

Founder & CEO Hal Smith Stevens is a systems engineer who has spent his career building infrastructure across demanding environments. His work spans AI systems used in emergency rooms in the United States to coordination platforms for air traffic operations across Australasia. Hal later co-founded Altered State Machine, whose tokenized AI models earned integrations with global brands, including FIFA and Authentic Brands Group for the Muhammad Ali estate.

Chief Technology Officer Pulasthi Bandara is a computer scientist with experience across AI, natural language processing, computer vision, and decentralized network architecture. A former CIMA exam finalist, he previously led engineering at Altered State Machine, where he worked on production-scale data systems, application layers, and full-stack infrastructure. At Tayga Labs, he directs the implementation of the AI coordination logic and the cryptographic attribution layers that anchor the protocol.

Chief Commercial Officer Justin Tomlinson is a senior media and product executive with more than twenty years of experience in international broadcasting and digital entertainment. As Director of Technology and Product at Sky Europe within Comcast, he oversaw major digital transformations in live sports, on-demand platforms, and large audience experiences across Europe.

Infrastructure That Pays Back

Rilla and its underpinning Tayga protocol demonstrate that bandwidth can be treated much like storage or compute in the decentralized context: programmable, verifiable, and user-provided. In practice, Rilla shows this through live video, one of the most demanding online use cases. Proving out live streaming opens the door to applying the model in many other domains (edge AI training and inference, game streaming, large file distribution, and software updates).

The broader idea of the ‘Open Edge’ is that internet infrastructure can emerge dynamically from the devices we already use every day. Instead of capacity being locked in faraway data centers, delivery becomes local, adaptive, and shaped by the communities consuming the content. It’s a shift that turns the economics of the internet inside out: rather than value flowing inwards to the major CDN providers, value flows outward to the network participants. That’s us, the communities around our favourite content.

As Hal Smith Stevens argues, the internet was originally designed to be peer-to-peer; if two computers are directly connected, the bandwidth between them is a product of their collaboration, not something that should be tolled by a third party. Rilla essentially operationalizes that philosophy: it uses modern AI/crypto tools to enable all of us to share our connections in a trustworthy way, at scale.

If successful, the implications are far-reaching. Imagine a future where streaming a World Cup final to 100M people doesn’t require a massive overbuild of servers, but instead leverages the idle bandwidth of those very viewers’ neighborhoods, cutting costs by an order of magnitude and making outages nearly a thing of the past. Or consider remote regions being able to watch events in high quality because the network auto-organizes whatever connectivity is present, rather than buffering endlessly due to distant, cold, or overloaded CDN nodes.

Rilla is still early in this journey, but is already working with media giants globally. It represents a compelling rethinking of how we deliver content in the 21st century. It’s an approach where infrastructure “pays back” by rewarding those who help run it, and where scaling is limited only by people’s willingness to participate. Given traffic trajectories and today’s streaming pain points, the approach feels timely.

If you’re interested in exploring the details behind this approach and its implications for streaming and beyond, you can learn more here.